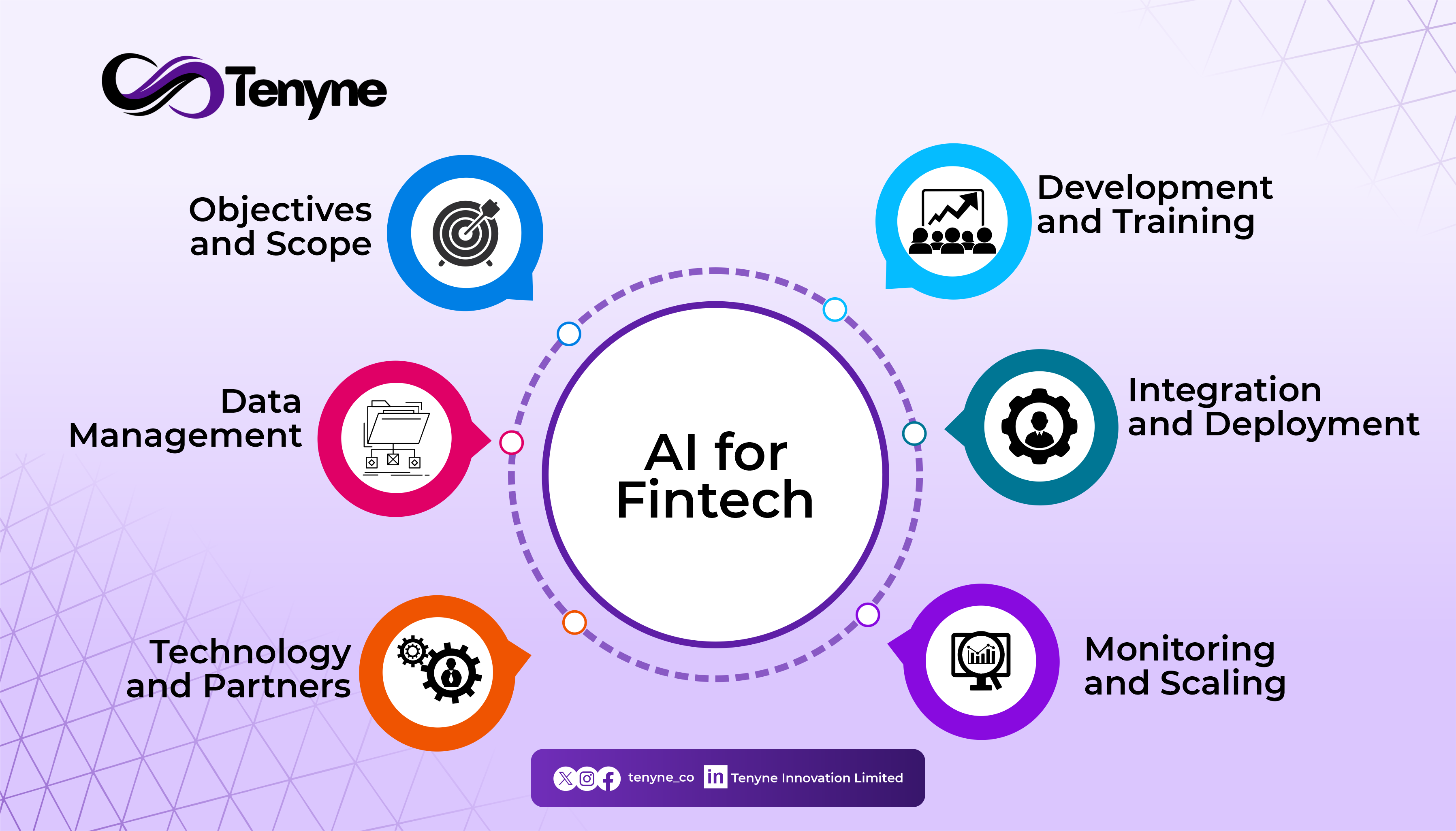

What Steps Should You Take When Integrating AI into Fintech?

AI can revolutionize fintech with better efficiency, personalization, and risk management — but successful adoption demands more than flipping a switch. Without high-quality data, clear goals, and strict compliance, integrating AI into fintech risks costly mistakes, biased outcomes, and loss of customer trust.

Jeff Arigo

Plenty of blog posts cover what to do when integrating AI into fintech. This one focuses on what to avoid. We have distilled the integration process into six steps, and each step below highlights the pitfalls to watch for.

Objectives and Scope

When scoping an AI-driven fintech product, avoid vague goals like “improve everything with AI” or locking in specific tools too early — these limit flexibility and blur focus. Stick to outcomes that drive core business value and avoid scope creep with non-essential features that delay launch and distract from user needs.

Base decisions on real, validated data — not assumptions about users, markets, or regulations. Don’t force AI where simpler solutions work better. And don’t treat compliance, ethics, or security as afterthoughts — bake them into the foundation to avoid costly gaps and build lasting trust.

Data Management

Inaccurate, incomplete, or siloed data kills model performance and leads to biased, unreliable insights. Weak data governance and ignoring compliance (like GDPR or CCPA) not only invites fines but also erodes trust. You need clear data lineage, scalable systems, and real-time visibility to stay agile in fast-moving markets.
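
To make "inaccurate or incomplete" concrete, here is a minimal Python sketch of record-level validation that runs before data reaches a model. The field names, the currency allow-list, and the `validate_record` and `split_batch` helpers are hypothetical; a production pipeline would typically use a schema or data-validation framework and store rejected records with their reasons to preserve lineage.

```python
from datetime import datetime

# Hypothetical schema for a transaction feed; adjust to your own data contract.
REQUIRED_FIELDS = ("transaction_id", "account_id", "amount", "currency", "timestamp")
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}


def validate_record(record: dict) -> list[str]:
    """Return human-readable problems; an empty list means the record passed."""
    problems = []

    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")

    # Accuracy: amounts must be positive numbers (bool is rejected explicitly).
    amount = record.get("amount")
    if amount is not None:
        if isinstance(amount, bool) or not isinstance(amount, (int, float)):
            problems.append("amount is not numeric")
        elif amount <= 0:
            problems.append("amount must be positive")

    # Consistency: accept only currencies the downstream model was trained on.
    currency = record.get("currency")
    if currency and currency not in ALLOWED_CURRENCIES:
        problems.append(f"unsupported currency {currency}")

    # Timestamps must parse with datetime.fromisoformat so events can be ordered.
    timestamp = record.get("timestamp")
    if timestamp:
        try:
            datetime.fromisoformat(timestamp)
        except (TypeError, ValueError):
            problems.append("timestamp is not ISO 8601")

    return problems


def split_batch(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Separate clean records from rejected ones, keeping the reasons for audit."""
    clean, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected
```

Rejecting with reasons, rather than silently dropping or "fixing" records, keeps the data trail explainable when a model's output is later questioned.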

Lax security, over-collected data, and outdated practices leave you exposed to breaches and bloat. Skipping monitoring or documentation opens the door to bias, drift, and failure. Build with transparency, test rigorously, and keep human oversight in the loop — especially when your tech impacts real lives and money.
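
Keeping a human in the loop can be as simple as refusing to automate uncertain cases. A minimal sketch, assuming the model outputs an estimated probability of default; the `RoutingPolicy` thresholds and route names are hypothetical placeholders for your own credit policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingPolicy:
    """Hypothetical thresholds on a model's estimated probability of default."""
    approve_below: float = 0.05   # clearly low risk: safe to automate
    decline_above: float = 0.60   # clearly high risk

    def __post_init__(self) -> None:
        if not 0.0 <= self.approve_below < self.decline_above <= 1.0:
            raise ValueError("need 0 <= approve_below < decline_above <= 1")


DEFAULT_POLICY = RoutingPolicy()


def route_application(score: float, policy: RoutingPolicy = DEFAULT_POLICY) -> str:
    """Decide whether a model score can be acted on automatically or needs a person."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    if score < policy.approve_below:
        return "auto_approve"
    if score > policy.decline_above:
        return "auto_decline"
    # Scores in the uncertain middle band go to a person instead of being automated.
    return "human_review"
```

Some teams also send every decline to a reviewer; where to draw the thresholds, and whether any adverse decision may be automated at all, are policy choices the sketch leaves open.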

Technology and Partners

Choose AI tech that’s proven, scalable, and built for real-time financial demands. Avoid black-box models that undermine trust and compliance. Look for modular systems that avoid vendor lock-in, and insist on strong data governance that meets GDPR, PCI-DSS, and other regulatory and industry requirements.
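
One alternative to a black box is a model whose decisions decompose into per-feature effects. The sketch below assumes a logistic-regression scorecard with hypothetical weights, intercept, and baseline applicant, and returns "reason codes" (the features that lowered the score most relative to the baseline) alongside the probability. Actual adverse-action reasons must follow your regulator's requirements; this only illustrates the mechanics.

```python
import math

# Hypothetical logistic-regression scorecard; real weights come from a fitted, validated model.
WEIGHTS = {
    "debt_to_income": -2.5,
    "credit_utilization": -1.8,
    "months_since_delinquency": 0.03,
    "years_at_employer": 0.15,
}
INTERCEPT = 1.0

# Hypothetical reference applicant used to express each feature's effect.
BASELINE = {
    "debt_to_income": 0.30,
    "credit_utilization": 0.30,
    "months_since_delinquency": 36,
    "years_at_employer": 4,
}


def score_with_reasons(features: dict[str, float], top_n: int = 2) -> tuple[float, list[str]]:
    """Return an approval probability plus the features that lowered it most."""
    logit = INTERCEPT + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    probability = 1.0 / (1.0 + math.exp(-logit))

    # Each feature's contribution to the logit, measured against the baseline applicant.
    contributions = {
        name: WEIGHTS[name] * (features[name] - BASELINE[name]) for name in WEIGHTS
    }
    # Most negative first; only factors that actually pushed the score down count.
    reasons = [name for name in sorted(contributions, key=contributions.get)
               if contributions[name] < 0]
    return probability, reasons[:top_n]
```

Because every contribution is a weight times a difference, a compliance reviewer can reproduce any explanation by hand.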

Steer clear of generic AI vendors with no fintech expertise. You need partners who understand AML, fair lending, and regulatory nuance — who offer transparency, not black boxes. Prioritize long-term reliability, ethical AI practices, and a shared mindset on compliance and innovation.

Development and Training

Biased or incomplete training data can lead to unfair credit decisions and compliance issues. Ignoring privacy laws such as GDPR, or using black-box models without explainability, risks legal trouble and loss of trust. Avoid unethical shortcuts: AI used for predatory pricing or surveillance will backfire. Keep human oversight in the loop.
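
A simple first screen for biased outcomes is the adverse impact ratio: one group's approval rate divided by a reference group's. The 0.8 threshold below is the "four-fifths" rule of thumb from US employment-selection guidance; it is a screening heuristic, not a fair-lending legal test. The helper names are hypothetical, and group labels are assumed to come from a properly governed source.

```python
def approval_rate(decisions: list[bool]) -> float:
    """Share of applications approved; True means approved."""
    if not decisions:
        raise ValueError("need at least one decision per group")
    return sum(decisions) / len(decisions)


def adverse_impact_ratio(group: list[bool], reference_group: list[bool]) -> float:
    """Approval rate of a group divided by the approval rate of the reference group."""
    reference_rate = approval_rate(reference_group)
    if reference_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return approval_rate(group) / reference_rate


def flag_disparity(group: list[bool], reference_group: list[bool],
                   threshold: float = 0.8) -> bool:
    """True when the ratio falls below the heuristic four-fifths threshold."""
    return adverse_impact_ratio(group, reference_group) < threshold
```

A flag is a prompt for investigation by people who understand the portfolio, not an automatic verdict of discrimination.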

Models trained only on past data may fail in volatile markets. Without constant monitoring and retraining, performance will decline. Weak infrastructure and neglect of regulations and industry standards (such as AML rules or PCI-DSS) can stall deployment and attract penalties. Blend innovation with compliance to scale safely and smartly.
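
Constant monitoring needs a concrete drift signal. A common choice in credit risk is the population stability index (PSI): over fixed bins, sum (actual share - expected share) * ln(actual share / expected share). The sketch assumes bins were fixed at training time; the 0.1 and 0.25 cut-offs are widely used rules of thumb, not regulatory thresholds.

```python
import math


def population_stability_index(expected_counts: list[int], actual_counts: list[int],
                               eps: float = 1e-6) -> float:
    """PSI between a baseline (training) distribution and a recent one, over the same bins."""
    if not expected_counts or len(expected_counts) != len(actual_counts):
        raise ValueError("both inputs need the same, non-zero number of bins")
    expected_total, actual_total = sum(expected_counts), sum(actual_counts)
    if expected_total == 0 or actual_total == 0:
        raise ValueError("each distribution needs at least one observation")

    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor each share at eps so empty bins do not cause log(0) or division by zero.
        e = max(expected / expected_total, eps)
        a = max(actual / actual_total, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    """Rule-of-thumb interpretation; tune thresholds to your own portfolio."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: consider retraining"
```

For example, a score distribution that moves from an even 25/25/25/25 split across four bins to 10/20/30/40 gives a PSI of about 0.23, a moderate shift worth investigating before it becomes a failure.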

Integration and Deployment

When adding AI to your fintech stack, don’t overlook compliance or data governance. Financial systems must meet strict rules like GDPR and AML, so build in explainability, audit trails, and privacy from the start. Make sure your AI works with your existing systems — glitches in APIs or data flow can break real-time transactions. And always stress-test models in staging to avoid surprises during market volatility.
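
For audit trails, one minimal approach is a hash-chained, append-only log: each decision record stores the SHA-256 hash of the previous record, so editing or reordering any entry breaks verification. The `AuditLog` class is hypothetical and keeps entries in memory; a real system would write to durable, access-controlled storage. Chaining makes tampering detectable, not impossible.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # sort_keys gives a stable serialization, so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only decision log; entries are chained by SHA-256 hashes."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, model_version: str, inputs: dict, output: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": copy.deepcopy(inputs),  # copy so later caller edits cannot alter the log
            "output": output,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; False means some entry was altered or reordered."""
        expected_prev = GENESIS_HASH
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if body["prev_hash"] != expected_prev or _digest(body) != entry["hash"]:
                return False
            expected_prev = entry["hash"]
        return True
```

Recording the model version with each decision also ties every outcome to the exact model that produced it, which matters once rollbacks enter the picture.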

At launch, don’t "set and forget." AI models can drift as customer behavior changes, so real-time monitoring and alerting are a must. Train your team — especially analysts and compliance staff — to use the tools correctly. Keep deployments transparent with version control, rollback plans, and checks for bias to stay accountable and build trust.

Monitoring and Scaling

Fintech models must adapt to market shifts, regulatory changes, and evolving user behavior. Relying on static validation or ignoring real-time metrics like latency and accuracy can lead to silent failures and compliance risks. Explainability and auditability aren’t optional — they’re your defense in a regulated space.
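
Watching latency and accuracy in real time can start with a rolling window and explicit thresholds. In this sketch the window size, the 200 ms p95 limit, and the 90% accuracy floor are hypothetical, and `RollingMonitor` is an illustrative name; in practice the alerts would feed your paging or dashboard system.

```python
import math
from collections import deque
from typing import Optional


class RollingMonitor:
    """Tracks recent latency and accuracy and reports threshold breaches."""

    def __init__(self, window: int = 500, max_p95_ms: float = 200.0,
                 min_accuracy: float = 0.90) -> None:
        self.latencies_ms: deque = deque(maxlen=window)
        self.outcomes: deque = deque(maxlen=window)  # True when prediction matched the confirmed label
        self.max_p95_ms = max_p95_ms
        self.min_accuracy = min_accuracy

    def record_latency(self, ms: float) -> None:
        self.latencies_ms.append(ms)

    def record_outcome(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def p95_latency(self) -> Optional[float]:
        if not self.latencies_ms:
            return None
        ordered = sorted(self.latencies_ms)
        # Nearest-rank percentile: smallest sample with at least 95% of samples at or below it.
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def alerts(self) -> list[str]:
        problems = []
        p95 = self.p95_latency()
        if p95 is not None and p95 > self.max_p95_ms:
            problems.append(f"p95 latency {p95:.0f} ms exceeds {self.max_p95_ms:.0f} ms")
        accuracy = self.accuracy()
        if accuracy is not None and accuracy < self.min_accuracy:
            problems.append(f"accuracy {accuracy:.1%} below {self.min_accuracy:.1%}")
        return problems
```

In lending, true labels such as defaults can arrive months after the prediction, so accuracy alerts lag; drift metrics on inputs and scores help cover that gap.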

Skipping load tests or infrastructure planning can cripple performance under high volume. Over-provisioning eats up budget, while rigid architectures kill agility. Scaling isn’t a one-off — retrain models regularly, embed feedback loops, and scale security with growth to avoid costly surprises.
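
Infrastructure planning can start from simple arithmetic that load tests then confirm. This sketch applies Little's law (requests in flight equal arrival rate times time in system) to estimate how many model-serving instances a peak needs. The peak rate, latency, per-instance concurrency, and 60% utilization target are hypothetical inputs to replace with measured values, and `required_instances` is an illustrative name.

```python
import math


def required_instances(peak_rps: float, latency_s: float,
                       concurrency_per_instance: int,
                       target_utilization: float = 0.6) -> int:
    """Estimate instances needed at peak using Little's law (L = lambda * W).

    Passing a tail latency such as p99 instead of the mean overestimates
    time in system, which deliberately builds in headroom.
    """
    if peak_rps <= 0 or latency_s <= 0 or concurrency_per_instance <= 0:
        raise ValueError("inputs must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    in_flight = peak_rps * latency_s                      # concurrent requests at peak
    usable_slots = concurrency_per_instance * target_utilization
    return math.ceil(in_flight / usable_slots)
```

For example, 2,000 requests per second at 150 ms, with 16 concurrent requests per instance and a 60% utilization target, gives 300 requests in flight and ceil(300 / 9.6) = 32 instances. Keeping utilization below 100% leaves room for bursts without paying for idle capacity year-round.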

Always Avoid Pitfalls when Integrating AI into Fintech

Integrating AI into fintech can unlock massive value — but only if you avoid common pitfalls like ignoring data drift, rushing scaling, or overlooking compliance and security. Stay sharp, build smart, and keep your systems resilient. For more insights on doing AI right in fintech, follow Tenyne, Inc. on social media.